HFES Health Care Symposium 2025: Pushing Boundaries and Questioning Traditions

by Katie Curtis

User Groups and Sample Sizes

Standard home use combination products (e.g., autoinjectors, pre-filled pens, and pre-filled syringes) have traditionally had three distinct user groups: patients, lay caregivers, and healthcare providers. In recent years, the Food and Drug Administration (FDA) has requested that manufacturers sub-divide user groups based on other characteristics, such as injection experience and training status, requiring 15 distinct participants for each sub-group. These requests have exploded HF validation study sample sizes from 45 participants to 100+ participants, posing financial and logistical challenges for manufacturers.

Researchers from Takeda and PA Consulting set out to investigate how key study design aspects (i.e., training) and participant characteristics (i.e., injection experience) impact objective performance to determine whether these permutations and combinations of user groups add value to validation studies.

Preliminary statistical analysis showed that training is associated with decreased use error rates while injection experience is associated with increased use error rates. These findings are not groundbreaking – it’s logical that adding training as a risk mitigation will improve objective performance, and injection experienced participants often experience additional use errors due to negative transfer or existing mental models. However, the team hypothesized that in some cases, sample sizes could be decreased by 20-40% per sub-group while still affording researchers the opportunity to observe the same variety of use error types.

The traditional 15 participant per user group sample size requirement is based on research conducted by Faulkner (2003) that suggested a sample size of 15 people was sufficient to find a minimum of 90% and an average of 97% of all problems with a timesheet software.[1] The HFES presenters’ research focused on whether the sample sizes for each user group sub-group could be less than 15. This is an important area of research given that Faulkner’s foundational study of usability sample size was performed more than 20 years ago with timesheet software on a desktop computer – not a medical or drug delivery device. Think how far computers have come in the past 20+ years, not to mention medical technology.

However, given that 15 has been the “magic number” for usability study sample sizes for many years, it will take time to generate enough evidence to support a paradigm shift. In the meantime, we are addressing this problem from a different angle – namely, by minimizing the number of discrete sub-groups that are required.

For example, consider the variable of injection experience. For a combination product, we typically expect that patient and caregiver user groups will consist of a mix of injection experienced and injection naïve participants. If we apply the “magic number 15” across all sub-groups, our sample size would include 60 lay participants – 15 each of injection experienced and injection naïve patients and caregivers.

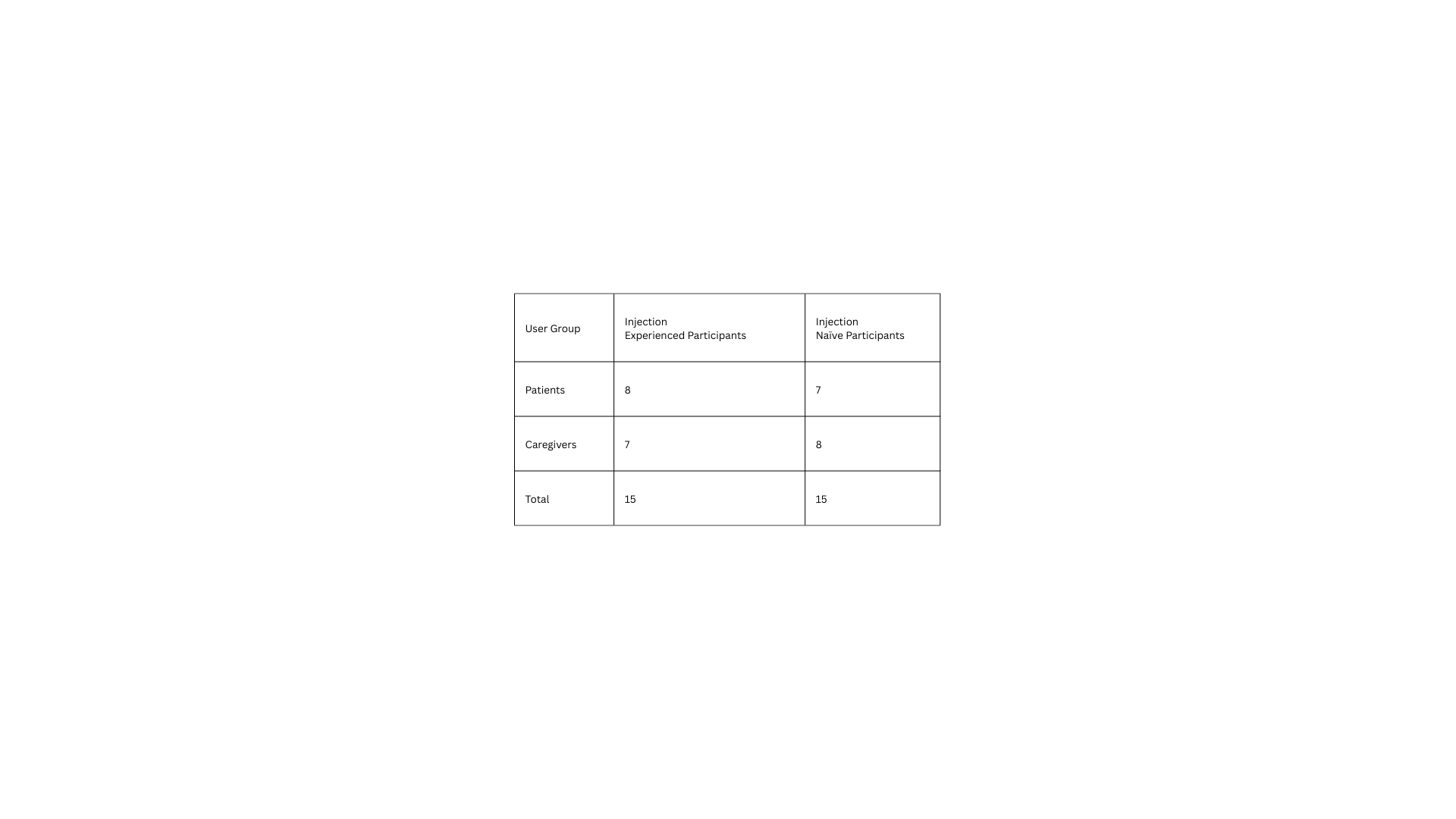

If we think more critically about the effect of injection experience, it should impact patient and caregiver performance similarly, e.g., by causing additional use errors due to negative transfer or existing mental models. Thus, it is reasonable to use a matrixed approach in which half of the patient participants (approximately 8) and half of the caregiver participants (approximately 7) are injection experienced to reach a total of 15 injection experienced participants and likewise with the injection naïve participants. Thus, we can recruit a total of 15 injection experienced and 15 injection naïve participants across both user groups, as shown in the following table.

At Design Science, we’ve justified this approach to the FDA and successfully limited sample sizes to 30 participants rather than 60 participants in such cases.

In our experience, the FDA is generally receptive to logical rationales. While we await further research on sample sizes for HF validation studies, we encourage you to push sample size discussions with the Agency – if you have the time and opportunity to do so (e.g., in a pre-submission) – by proposing study designs that evaluate the relevant range of user characteristics while utilizing resources effectively.

Platform Device Testing

We’ve all heard the saying that the definition of insanity is doing the same thing over and over and expecting different results. At this year’s conference, multiple presenters commented on the madness of executing HF validation studies for every single new combination product that leverages the same platform device. We all know and love platforms like the YpsoMate autoinjector, Molly® autoinjector, and BD UltraSafe Plus™. But after writing dozens of validation reports for the same devices, you start to feel a little… insane.

Platform device manufacturers invest time and effort to develop and achieve FDA approval for platform devices. These platforms have subsequently been validated with many different types of users for various indications, from cardiovascular disease to rheumatoid arthritis and everything in between. For example, as of 2024, 100M+ units of the YpsoMate have been sold with 20+ different drugs.[2] “When is enough, enough?” the Eurofins Human Factors MD team mused.

The 2024 FDA draft guidance on use-related risk analyses (URRAs)[3] added fuel to the fire. This guidance outlines a pathway for manufacturers to scale their HF efforts using a risk-based approach, in some cases even foregoing HF validation testing – if justified by a strong rationale (check out our blog post to learn more about the 2024 URRA guidance).

The FDA often provides feedback that “HF data” is required to support a manufacturer’s submission. At this year’s conference, we were reminded that “HF data” is not synonymous with “HF validation study results”. In some cases, alternative supporting data may be sufficient. Presenters suggested various methods to generate alternative HF data, which were consistent with the 2024 URRA guidance (e.g., creating comprehensive URRAs, researching known use problems, conducting comparative analyses with similar products, and searching publicly available FDA reviews for similar products using the Drugs@FDA database).

Since the Food and Drug Administration Modernization Act of 1997, Congress has directed the FDA to take the least burdensome approach to premarket evaluations. The FDA’s Least Burdensome Provisions guidance defines least burdensome as, “the minimum amount of information necessary to adequately address a relevant regulatory question or issue through the most efficient manner at the right time.” [4] There was palpable excitement in Toronto at the promise of pursuing the “least burdensome” approach to generate HF data for combination products that leverage well-studied platform devices. Is now the “right time” to challenge the status quo and push for more efficient HF strategies for platform-based combination products?

Knowledge Task Methods

I’m not afraid to say it – I hate knowledge tasks. They’re boring to ask participants, uninteresting to analyze, and difficult to write (well, good ones, at least – poorly written knowledge tasks are a dime a dozen). Judging from the laughter in the audience during the conference, I sense I’m not the only one who feels this way.

In their most recent HF guidance, the FDA defines knowledge tasks as, “those tasks that involve assessing information provided by the labeling,” [5] yet the Agency considers it “leading” if moderators explicitly direct participants to use the labeling to answer knowledge questions. But if participants never look at the labeling… then what are we evaluating? Allowing participants to answer knowledge assessments based on experience is unhelpful because it only teaches us that labeling mitigations are not effective if people don’t read the instructions. Any HF engineer could tell you that without running a validation study.

Various suggestions were presented to improve knowledge assessment methods, from modifying the wording of questions (e.g., stating, “According to the manufacturer…” at the beginning of every question) to refining the flow of the root cause discussion to more thoroughly evaluate labeling comprehension.

One exciting contribution to the discussion presented by Molly Story of Human Spectrum Design was a snapshot of the eagerly anticipated 2025 release of ANSI/AAMI HE75, which is expected to recommend the following approach when discussing incorrect responses with participants:

“…ask a series of follow-up questions to determine the possible factors contributing to the incorrect response, using a process of progressive prompting as follows and in this order:

Record the response as incorrect.

Determine why the participant answered as they did.

Determine what the participant would do in real life if they wanted to know that information.

Determine whether the participant was aware that some of the materials in the room (i.e., the labeling) might provide the information they needed to answer the question.

Invite the participant to look at the element of labeling – but not the specific section of that labeling – that contains the information needed to answer the question correctly.”

Many strong moderators already use these techniques. However, having a standard approach for knowledge task follow-up will drive consistency in the HF industry and (partially) alleviate our collective frustration with these tricky tasks.

Looking Ahead

The 2025 HFES Health Care Symposium reminded us that challenging tradition is essential for growth. Whether it’s re-evaluating sample sizes, re-thinking platform device testing, or refining knowledge task methods, the human factors community is clearly ever-evolving. Conversations like these are helping us to shape the future of how we design, evaluate, and commercialize healthcare products that work for real people in real-world contexts.

At Design Science, we’re proud to be part of that future. As your human factors partner, we bring deep experience, scientific rigor, and practical insight to every phase of medical and drug delivery device development. If you’re rethinking your HF strategy – or ready to push a few boundaries of your own – we’d love to talk.

Let’s make it make sense, together.

[1] Faulkner, L. (2003). Beyond the five-user assumption: Benefits of increased sample sizes in usability testing. Behavior Research Methods, Instruments, and Computers, 35(3), 379-383.

[2] Thompson I, “Ypsomed’s Platform Product Transformation and Approach to Strategic Partner Networking for Self-Injection Devices”. ONdrugDelivery, Issue 163 (Jul 2024), pp 24–28.

[3] FDA Draft Guidance for Industry and FDA Staff: Purpose and Content of Use-Related Risk Analyses for Drugs, Biological Products, and Combination Products (2024)

[4] FDA Final Guidance for Industry and FDA Staff: The Least Burdensome Provisions: Concept and Principles

[5] FDA Draft Guidance for Industry and FDA Staff: Purpose and Content of Use-Related Risk Analyses for Drugs, Biological Products, and Combination Products (2024)

Share this entry